
Revolutionary Event Cameras That Capture What Traditional Cameras Miss

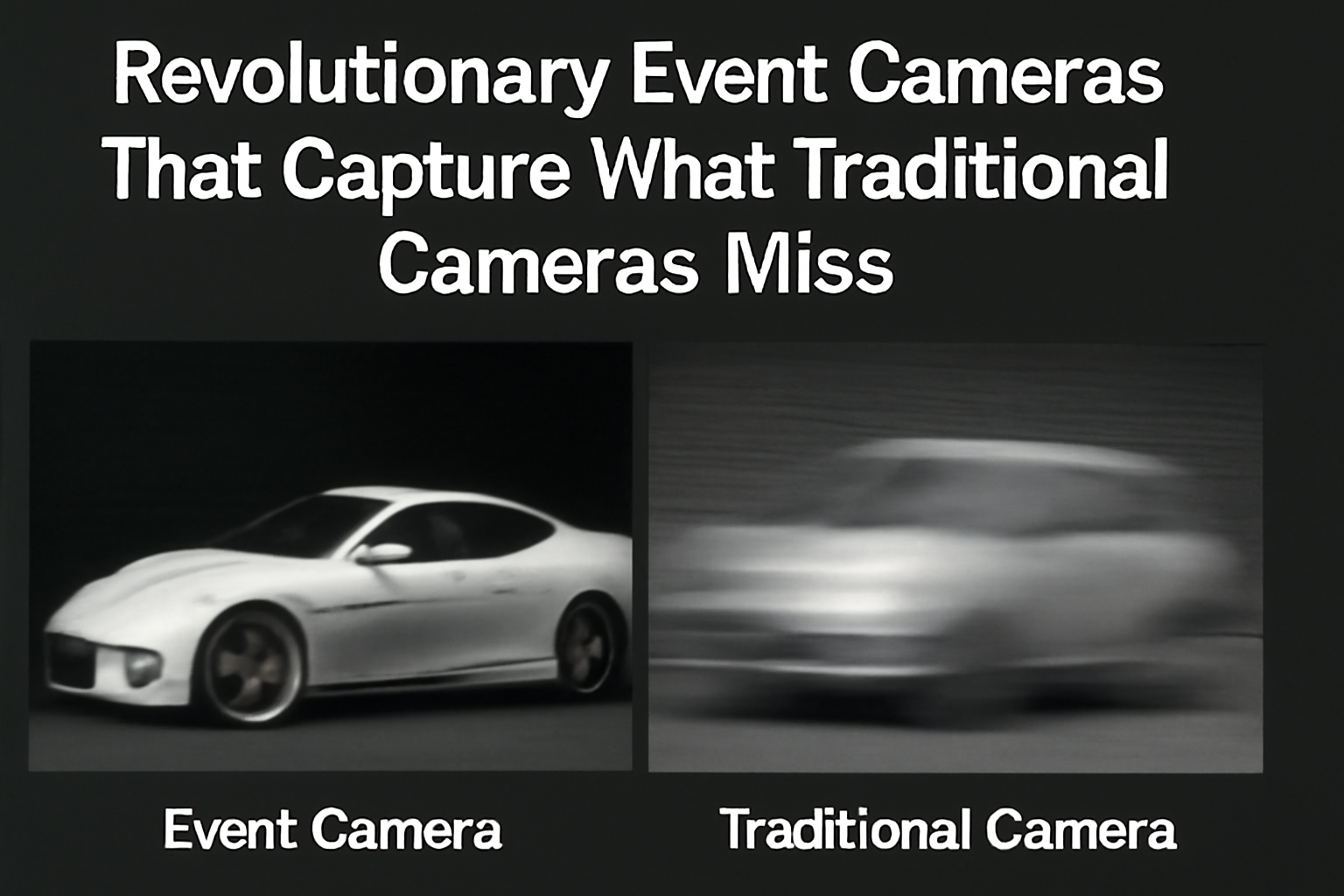

In high-speed and high-contrast environments such as autonomous driving, robotics, industrial inspection and motion capture, the core challenge is “how to see clearly and respond fast.” Conventional frame-based cameras record full images at fixed rates, and when confronted with high-speed motion or extreme lighting they often suffer from motion blur, over- or under-exposure, and high latency. Event cameras (also called dynamic vision sensors; common variants are referred to as DVS, DAVIS or EVS) replace full-frame exposure with asynchronous, per-pixel triggering on brightness changes. This inherently gives them very high temporal resolution, high dynamic range (HDR), low latency, and low bandwidth and power consumption. Survey literature and major manufacturers' datasheets indicate that event cameras substantially outperform traditional frame cameras in dynamic range (> 120 dB versus roughly 60 dB) and responsiveness (microsecond-level versus millisecond-level latency).

1. Working-principle differences: from frames to events

- Conventional cameras (Frame-based): They capture whole frames at a fixed frame rate (e.g., 30/60/120 fps), and every pixel integrates light over an exposure window. When motion is fast, the scene can shift significantly during the exposure, causing motion blur; in high-contrast or low-light scenes, detail is lost to limited dynamic range or noise.

- Event cameras (Event-based): Each pixel operates independently and monitors changes in log-intensity; when the change since the pixel's last event exceeds a threshold, the pixel immediately emits an “event” (pixel coordinates, timestamp, polarity). Because there is no full-frame exposure to wait for, the output is asynchronous and sparse, yet very dense in time. The design is inspired by the retina: independent pixels that respond to contrast changes give the sensor its high dynamic range and very low latency.
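The triggering rule described above can be sketched as an idealized single-pixel model. This is a minimal illustration, not any vendor's pixel circuit: the contrast threshold of 0.2 (in log units) is an assumed value, each event is stamped with the time of the input sample that crossed the threshold, and real-sensor effects such as noise, refractory periods and per-pixel threshold mismatch are ignored. The names `Event` and `simulate_pixel` are hypothetical.

```python
import math
from typing import List, NamedTuple, Sequence, Tuple

class Event(NamedTuple):
    x: int
    y: int
    t: float  # timestamp in seconds
    p: int    # polarity: +1 = brighter, -1 = darker

def simulate_pixel(x: int, y: int, samples: Sequence[Tuple[float, float]],
                   threshold: float = 0.2) -> List[Event]:
    """Idealized event pixel (hypothetical model, not a real sensor circuit).

    samples: (time, intensity) pairs in time order, intensity > 0.
    An event fires whenever log-intensity moves at least `threshold` away
    from the reference level memorized at the pixel's previous event.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    events: List[Event] = []
    ref = math.log(samples[0][1])  # reference log-intensity
    for t, intensity in samples[1:]:
        delta = math.log(intensity) - ref
        # A large change can cross the threshold several times -> several events.
        while abs(delta) >= threshold:
            p = 1 if delta > 0 else -1
            events.append(Event(x, y, t, p))
            ref += p * threshold  # move the reference by one threshold step
            delta = math.log(intensity) - ref
    return events

if __name__ == "__main__":
    # Brightness doubles, holds, then drops to a quarter of its peak.
    samples = [(0.0, 100.0), (0.001, 200.0), (0.002, 200.0), (0.003, 50.0)]
    for ev in simulate_pixel(10, 20, samples):
        print(ev)
```

With these inputs the doubling at 1 ms (a log change of about 0.69) yields three positive events, the constant interval at 2 ms yields none, and the drop at 3 ms yields six negative events, which illustrates both the sparsity and the contrast-driven triggering.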

Key point: An event camera isn’t merely a “higher frame rate” version of a conventional camera; it represents a fundamentally different data acquisition paradigm. This difference underpins the large performance gap in extreme conditions.

2. Key metrics – head-to-head comparison

Dynamic Range (HDR)

- Conventional Cameras: Frame sensors typically achieve dynamic ranges of around 60 dB, which often limits performance under strong backlighting, in night scenes with oncoming headlights, near welding sparks, etc.

- Event Cameras: Many commercially available devices specify dynamic ranges greater than 120 dB, some up to ~140 dB, covering a vast span from moonlight to daylight.

For example, the Sony/Prophesee IMX636 sensor (a joint development) specifies <100 µs pixel latency at 1000 lux, with a dynamic range of >86 dB measured over 5 lux–100 klux and >120 dB measured over 80 mlux–100 klux.
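The decibel figures above follow the 20·log10 convention commonly used for image-sensor dynamic range, so they can be checked against the quoted illuminance spans. The sketch below (function names are illustrative) shows that 5 lux–100 klux corresponds to about 86 dB and 80 mlux–100 klux to about 122 dB, consistent with the IMX636 figures, and that 60 dB and 120 dB correspond to contrast ratios of 1,000:1 and 1,000,000:1.

```python
import math

def dynamic_range_db(i_max: float, i_min: float) -> float:
    """Dynamic range in dB using the 20*log10 convention for intensity ratios."""
    return 20.0 * math.log10(i_max / i_min)

def ratio_from_db(db: float) -> float:
    """Inverse: the max/min intensity ratio that a dB figure corresponds to."""
    return 10.0 ** (db / 20.0)

print(round(dynamic_range_db(100_000, 5), 1))     # 5 lux to 100 klux   -> 86.0
print(round(dynamic_range_db(100_000, 0.08), 1))  # 80 mlux to 100 klux -> 121.9
print(ratio_from_db(60))                          # ~60 dB frame sensor -> 1000.0
print(ratio_from_db(120))                         # 120 dB event sensor -> 1000000.0
```

Each additional 20 dB is another factor of ten in the brightest-to-darkest ratio, which is why the gap between 60 dB and 120 dB is a factor of 1,000 rather than a factor of two.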

Temporal Resolution & System Latency

- Conventional Cameras: Frame rates are limited (tens to hundreds of fps), and system latency is normally in the millisecond range. When the target moves quickly, the image blurs or the target moves a long way between frames.

- Event Cameras: Events carry microsecond-resolution timestamps, and pixel-level triggering means changes are reported almost immediately, with latencies typically ranging from tens to a few hundred µs. One device (DAVIS346) lists a minimum latency of ~20 µs and a typical latency below 1 ms.
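To make the latency gap concrete, one can compute how far a moving target travels between observations. The sketch assumes a target moving at 10 m/s (an illustrative value, roughly 36 km/h) and compares a 30 fps frame interval with the 1 ms and ~20 µs figures quoted for the DAVIS346; any processing time downstream of the sensor is ignored.

```python
def displacement_mm(speed_m_per_s: float, interval_s: float) -> float:
    """Distance in millimetres that a target covers during one interval."""
    return speed_m_per_s * interval_s * 1000.0

SPEED = 10.0  # m/s, illustrative (about 36 km/h)

for label, interval in [("30 fps frame interval", 1 / 30),
                        ("1 ms latency", 1e-3),
                        ("20 us latency", 20e-6)]:
    print(f"{label:>22}: {displacement_mm(SPEED, interval):8.2f} mm")
```

The target moves roughly 333 mm between 30 fps frames, 10 mm within 1 ms, and 0.2 mm within 20 µs.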

Motion Blur

- Conventional Cameras: Motion blur arises because light is integrated over the exposure time; shortening the exposure reduces blur but collects less light, increasing noise or causing under-exposure.

- Event Cameras: Because there is no global exposure and output is triggered by change rather than by integration, motion blur is negligible or absent, which is especially beneficial on high-speed or vibrating platforms.
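The exposure trade-off for frame cameras can be quantified with a simple constant-velocity model: the blur streak length is speed × exposure time, while the collected light scales linearly with exposure. The sketch additionally assumes shot-noise-limited imaging, where the signal-to-noise ratio scales with the square root of collected light; the 2000 px/s image-plane speed and 10 ms reference exposure are illustrative values.

```python
import math

def blur_px(speed_px_per_s: float, exposure_s: float) -> float:
    """Blur streak length (pixels) for a target moving at constant image-plane speed."""
    return speed_px_per_s * exposure_s

def relative_light(exposure_s: float, reference_s: float) -> float:
    """Collected light relative to a reference exposure (linear in exposure time)."""
    return exposure_s / reference_s

def relative_snr(exposure_s: float, reference_s: float) -> float:
    """Shot-noise-limited SNR relative to the reference (sqrt of relative light)."""
    return math.sqrt(relative_light(exposure_s, reference_s))

SPEED_PX = 2000.0   # px/s, illustrative
REFERENCE = 10e-3   # 10 ms reference exposure

for exposure in (10e-3, 1e-3, 0.1e-3):
    print(f"{exposure * 1e3:4.1f} ms: blur {blur_px(SPEED_PX, exposure):5.1f} px, "
          f"light {relative_light(exposure, REFERENCE):6.1%}, "
          f"SNR x{relative_snr(exposure, REFERENCE):.2f}")
```

Cutting the exposure from 10 ms to 0.1 ms shrinks the streak from 20 px to 0.2 px but leaves only 1% of the light and a tenth of the SNR under these assumptions; this is the trade-off that event pixels sidestep by not integrating over a shared exposure window.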

Bandwidth & Power

- Conventional Cameras: They continuously output full frames even if the scene is static, producing redundant data and consuming more bandwidth/power.

- Event Cameras: They output data only when something changes; in a static or low-motion scene the sensor is almost silent, which amounts to native compression at the sensor level, lower bandwidth and lower power.
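A back-of-the-envelope comparison shows why sparse output saves bandwidth in quiet scenes, and also where the saving ends. The sketch assumes an uncompressed 8-bit 1280×720 frame stream at 60 fps and a hypothetical fixed 8-byte encoding per event (real sensors use vendor-specific, often more compact, formats); the event rates are illustrative.

```python
def frame_bandwidth_mb_s(width: int, height: int, fps: float,
                         bytes_per_pixel: int = 1) -> float:
    """Uncompressed frame-stream data rate in MB/s (1 MB = 1e6 bytes)."""
    return width * height * fps * bytes_per_pixel / 1e6

def event_bandwidth_mb_s(events_per_s: float, bytes_per_event: int = 8) -> float:
    """Event-stream data rate in MB/s. 8 bytes/event is a hypothetical encoding."""
    return events_per_s * bytes_per_event / 1e6

print(f"frames: {frame_bandwidth_mb_s(1280, 720, 60):.1f} MB/s")  # content-independent
for rate in (1e4, 1e6, 1e7):  # quiet, moderate and very busy scenes (illustrative)
    print(f"events at {rate:.0e} ev/s: {event_bandwidth_mb_s(rate):.2f} MB/s")
```

The frame stream costs about 55 MB/s regardless of content, while the event stream ranges from 0.08 MB/s at 10^4 events/s to 80 MB/s at 10^7 events/s, so the savings depend on scene activity.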

Some event sensors provide on‐chip features like event‐rate control, anti‐flicker filtering, and embedded processing (e.g., Prophesee GENX320 lists dynamic range >140 dB, pixel latency <150 µs, with anti‐flicker/event‐rate control).
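Event-rate control is implemented on-chip and its exact behaviour is vendor-specific; the sketch below is only a hypothetical software analogue showing the idea of bounding the output rate. It uses the simplest possible policy, keeping the first N events in each fixed time window and dropping the rest, which biases toward events that arrive early in a window; a production design might instead decimate uniformly in time or space.

```python
from typing import Iterable, List, Optional, Tuple

# Hypothetical event layout: (x, y, t in microseconds, polarity).
Event = Tuple[int, int, int, int]

def rate_limit(events: Iterable[Event], max_per_window: int,
               window_us: int = 1000) -> List[Event]:
    """Software analogue of event-rate control (illustrative only).

    Keeps at most `max_per_window` events in each fixed window of `window_us`
    microseconds and drops the rest. Assumes events are sorted by timestamp.
    """
    kept: List[Event] = []
    current: Optional[int] = None
    count = 0
    for ev in events:
        window = ev[2] // window_us
        if window != current:  # new window: reset the budget
            current, count = window, 0
        if count < max_per_window:
            kept.append(ev)
            count += 1
    return kept

if __name__ == "__main__":
    burst = [(0, 0, t, 1) for t in range(3000)]  # 1 event/us for 3 ms
    print(len(rate_limit(burst, max_per_window=100)))  # 3 windows x 100 events
```

A burst of 3,000 events over 3 ms is reduced to 300 events, 100 per 1 ms window.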

3. Real-world benefits in extreme lighting and high-speed motion

Scenario A: Night / Strong Back-lighting (Headlights, Tunnel Entry)

Conventional cameras struggle to capture both bright highlights and dark shadows, often losing detail in overexposed or underexposed regions. Event cameras, with >120 dB dynamic range and contrast‐change triggering, can reliably produce event streams under such dramatic lighting conditions—preserving edges and motion cues essential for navigation/tracking.

Scenario B: High-Speed Motion (Drone Maneuvers, Robot Arms, Conveyors)

Large displacement per frame causes traditional cameras to blur or skip information between frames. Event cameras, thanks to their microsecond‐level time resolution, output events that track edge changes almost instantaneously—making them well suited for low-latency control loops and high-speed defect detection in moving systems.

Scenario C: Welding / Sparks / Flickering Light Sources

Strong flicker or very high contrast (such as welding arcs) causes saturation or artifacts in conventional sensors. Many event sensors have built-in flicker filters, event-rate control and high dynamic range, enabling stable performance under such harsh illumination.

4. Algorithmic ecosystem & datasets: how to “use” event data

Event data is spatially sparse and asynchronous, yet extremely dense in time. This calls for algorithms designed specifically for it, covering feature tracking, optical flow, SLAM/VO, 3D reconstruction, detection/recognition and more. Surveys provide detailed overviews of these tasks.
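Many of these methods begin by aggregating the event stream into a dense representation that existing tools can consume. The sketch below shows three common choices from the literature: an event frame (per-pixel sum of polarities over a time window), a time surface (an exponentially decaying map of how recently each pixel fired), and a voxel grid (a spatio-temporal encoding that splits the window into temporal bins). It is a minimal pure-Python illustration, not any paper's reference implementation; the window bounds, the decay constant `tau` and the bin count are free parameters, and events are assumed to be time-sorted (x, y, t, polarity) tuples with t in seconds.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical event layout: (x, y, t in seconds, polarity +1/-1), sorted by t.
Event = Tuple[int, int, float, int]

def event_frame(events: Sequence[Event], width: int, height: int,
                t_start: float, t_end: float) -> List[List[int]]:
    """Event frame: per-pixel sum of polarities for t_start <= t < t_end."""
    frame = [[0] * width for _ in range(height)]
    for x, y, t, p in events:
        if t_start <= t < t_end:
            frame[y][x] += p
    return frame

def time_surface(events: Sequence[Event], width: int, height: int,
                 t_ref: float, tau: float) -> List[List[float]]:
    """Time surface: exp(-(t_ref - t_last) / tau) per pixel, where t_last is the
    most recent event at or before t_ref; pixels that never fired are 0.0."""
    last: List[List[float]] = [[-math.inf] * width for _ in range(height)]
    for x, y, t, _p in events:
        if t <= t_ref:
            last[y][x] = max(last[y][x], t)
    return [[math.exp(-(t_ref - tl) / tau) for tl in row] for row in last]

def voxel_grid(events: Sequence[Event], num_bins: int, width: int,
               height: int) -> List[List[List[float]]]:
    """Voxel grid: the window is split into num_bins temporal bins and each
    event's polarity is shared between the two nearest bins (linear weights)."""
    grid = [[[0.0] * width for _ in range(height)] for _ in range(num_bins)]
    if not events:
        return grid
    t0, t1 = events[0][2], events[-1][2]
    span = (t1 - t0) or 1.0  # all events simultaneous: put them in bin 0
    for x, y, t, p in events:
        tn = (num_bins - 1) * (t - t0) / span  # normalized time in [0, num_bins - 1]
        lo = int(tn)
        frac = tn - lo
        grid[lo][y][x] += p * (1.0 - frac)
        if lo + 1 < num_bins:
            grid[lo + 1][y][x] += p * frac
    return grid
```

The event frame discards timing inside the window, the time surface keeps only the latest timestamp per pixel, and the voxel grid keeps coarse timing in a tensor that a standard or sparse CNN can consume; which loss is acceptable depends on the task.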

Open datasets & benchmarks

- MVSEC (Multi Vehicle Stereo Event Camera Dataset): Covers driving, drone and handheld sequences; includes events, grey frames, IMU, LiDAR and ground truth; multiple lighting conditions.

- DSEC: A stereo event camera dataset for driving; high resolution, multiple times of day, paired with conventional cameras + LiDAR; used for stereo/flow/detection evaluation.

These datasets and benchmarks support development of event‐based deep learning, fusion pipelines (frames + events), and real‐world evaluation.

5. Event cameras are not universally optimal: limitations & trade-offs

- Static textures / low-contrast regions: If there is little or no brightness change, event output is minimal; this reduces information for recognition or reconstruction. Therefore, event sensors are often paired with conventional frame sensors (e.g., DAVIS integrates both events & APS frames).

- Algorithmic maturity: Because event data is asynchronous and spatially sparse, standard frame-based vision algorithms (CNNs, optical flow on images, etc.) do not directly apply. Specialized methods (event aggregations, spatio-temporal encodings, sparse convolutions, Transformers for events) are required. Surveys recognise that the ecosystem is still evolving.

- Image‐style reconstruction & high-resolution texture: Reconstructing high‐fidelity intensity images purely from events remains challenging; although many methods exist, it is fundamentally an ill-posed problem requiring strong priors.
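The ill-posedness is visible in the simplest reconstruction method, direct integration: under the idealized model in which each event marks a log-intensity change of ±C, the log image at time t is the initial log image plus C times the per-pixel sum of polarities. The sketch assumes a known, uniform contrast threshold C and a known, uniform initial intensity, neither of which is available from events alone in practice; the function name `direct_integration` is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical event layout: (x, y, t in seconds, polarity +1/-1).
Event = Tuple[int, int, float, int]

def direct_integration(events: Sequence[Event], width: int, height: int,
                       contrast: float, initial_log: float,
                       t_query: float) -> List[List[float]]:
    """Naive reconstruction: log I(t) = log I(0) + C * (sum of polarities up to t).

    Assumes a known, uniform contrast threshold C and a known, uniform initial
    log-intensity; neither is available from events alone in practice.
    """
    log_img = [[initial_log] * width for _ in range(height)]
    for x, y, t, p in events:
        if t <= t_query:
            log_img[y][x] += contrast * p
    return [[math.exp(v) for v in row] for row in log_img]
```

A pixel that never fires stays at the assumed initial value whether the true scene there is bright or dark, and any error in C or in the sensor's noise accumulates with every event; this is why practical methods rely on strong priors or learned models.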

6. Hybrid & fusion approaches – the practical deployment path

Industrial deployment increasingly favours events + frames fusion: conventional frames provide appearance, colour and high spatial resolution, while events provide edge and temporal cues. For example, the DAVIS series integrates DVS and APS pixels on one chip; the Sony/Prophesee IMX636 acts as an event front-end, often paired with conventional sensors or processed jointly in ISP or neural-network backends.
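A basic step in any frame + event pipeline is temporal alignment: assigning to each frame the events that occurred since the previous frame. The sketch assumes both streams are timestamped in microseconds on a shared clock (as on single-chip sensors such as the DAVIS; separate sensors would need hardware synchronization or calibration) and uses binary search over the sorted event timestamps. The function name is hypothetical.

```python
import bisect
from typing import List, Sequence, Tuple

# Hypothetical event layout: (x, y, t in microseconds, polarity).
Event = Tuple[int, int, int, int]

def events_between_frames(events: Sequence[Event],
                          frame_ts_us: Sequence[int]) -> List[List[Event]]:
    """For each pair of consecutive frame timestamps (t_prev, t_curr), return
    the events with t_prev <= t < t_curr. Both inputs must be sorted by time
    and share one clock."""
    ts = [e[2] for e in events]  # event timestamps, for binary search
    slices: List[List[Event]] = []
    for t_prev, t_curr in zip(frame_ts_us, frame_ts_us[1:]):
        lo = bisect.bisect_left(ts, t_prev)
        hi = bisect.bisect_left(ts, t_curr)
        slices.append(list(events[lo:hi]))
    return slices

if __name__ == "__main__":
    events = [(0, 0, t, 1) for t in (0, 10, 20, 30, 40)]
    frames = [0, 25, 50]
    print([len(s) for s in events_between_frames(events, frames)])  # [3, 2]
```

Each resulting slice can then be converted into an event representation and fed to a fusion network alongside the frame that closes the interval.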

7. Sample devices & parameter references (selective)

- iniVation DAVIS346: 346×260 resolution, event dynamic range ~120 dB, minimum latency ~20 µs, event throughput up to 12 MEvents/s; also outputs APS frames.

- iniVation full device specification sheet: several models list latency <1 ms, dynamic range ~120 dB.

- Sony/Prophesee IMX636: 1280×720 resolution event sensor, latency <100 µs (at 1000 lux), dynamic range >86 dB (5 lux–100 klux) and >120 dB (80 mlux–100 klux).

- Prophesee GENX320: Dynamic range >140 dB, pixel latency <150 µs, built‐in anti‐flicker/event‐rate control.

These illustrate the “hard numbers” behind event sensor advantages in HDR and latency.

8. Conclusion & Selection Advice

- If your scenario involves “high speed / high dynamics / extreme lighting” (e.g., agile UAV manoeuvres, oncoming headlights, welding/sparks, high-throughput surface inspection), an event camera can significantly mitigate the motion blur, over/under-exposure and latency/bandwidth issues faced by conventional cameras.

- If your task emphasizes fine colour/texture/appearance under moderate conditions (e.g., static inspection, high-resolution recognition, document imaging), a conventional frame camera remains foundational; in that case, consider a hybrid (frame + event) approach to capture both appearance and temporal/edge cues.

- For engineering deployment: evaluate whether your data‐pipeline and algorithmic stack support event processing (e.g., event aggregation windows, reconstruction or direct event processing, real‐time constraints). Use public benchmarks like MVSEC/DSEC to validate latency, accuracy and coverage before selecting hardware.

In short: in the contest between “seeing clearly” and “responding in time,” event cameras deliver a fundamentally better solution for extreme lighting and high-speed motion, whereas for broader, more conventional visual tasks the pragmatic path is fusing event and frame sensors. Event-based vision represents a paradigm shift in sensing, not merely a higher-frame-rate camera.